How to Run LLMs Locally: Complete 2025 Guide to Self-Hosted AI Models

The AI revolution is happening, but you don’t need to send your sensitive data to cloud services or pay monthly subscription fees to benefit from it. Running large language models locally on your own computer gives you full control over your AI interactions, keeps your prompts and data on your own hardware, and replaces per-request fees with a one-time hardware investment.

In this guide, you’ll learn everything you need to run LLMs locally, from choosing the right tools and models to optimizing performance on your hardware. Whether you’re a developer seeking coding assistance, a business protecting sensitive data, or an AI enthusiast wanting offline access, local LLMs offer compelling advantages over cloud-based alternatives.

We’ll walk through the best tools for 2025, hardware requirements that won’t break the bank, and step-by-step tutorials for getting your first local LLM running in minutes. By the end, you’ll know how to use state-of-the-art language models without compromising your privacy or budget.

What You’ll Learn

- What “running LLMs locally” means and how it works

- The benefits of self-hosted AI vs cloud AI

- The best tools of 2025 (LM Studio, Ollama, GPT4All, Jan, llamafile, llama.cpp)

- Hardware requirements for models from 2B to 70B+ parameters

- How to install and run your first model

- How to create a secure local API server

- Real-world use cases for personal and business workflows

- Performance tips, troubleshooting, and cost comparisons

Introduction to Large Language Models

Large language models (LLMs) are artificial intelligence systems designed to understand, generate, and manipulate human language. Trained on massive text datasets, they produce coherent, context-aware responses, which makes them useful for a wide range of applications: chatbots and virtual assistants, language translation, text summarization, and creative content generation.

Running large language models locally on your own computer offers advantages that cloud services can’t match. When you run LLMs locally, sensitive data never leaves your device, you no longer depend on an external provider, and there are no recurring subscription fees. As a result, both individuals and organizations are choosing to run LLMs locally for everything from business automation to personal productivity, without the security concerns or ongoing costs of hosted services.

Whether you want to experiment with new models, build custom AI-powered tools, or simply get a more private AI experience that also works offline, running LLMs locally puts the capabilities of state-of-the-art language models directly in your hands.

What Does Running LLMs Locally Mean?

Running large language models locally means operating AI models directly on your own computer instead of relying on cloud services like ChatGPT, Claude, or Gemini. When you run an LLM locally, the entire inference process happens on your own hardware, and no prompts or responses are sent over the internet to external servers.

The core benefits of local LLMs are data privacy, no subscription costs after the initial setup, and offline functionality that works without an internet connection. Because your sensitive data never leaves your device, local inference is particularly valuable for businesses handling confidential information, developers working on proprietary code, and individuals concerned about privacy.

Unlike cloud-based AI services that require API keys and charge per request, local models offer unlimited usage once you have downloaded the model file from a source such as Hugging Face or GitHub and saved it to your computer. Your costs become predictable (hardware and electricity), and API rate limits and provider outages no longer affect your workflow.

A practical comparison illustrates the difference: when you use ChatGPT, your questions travel to OpenAI’s servers for processing before the responses come back. With a local LLM like Llama 3.2 running on your machine, everything happens on your consumer hardware. Cloud services offer convenience and access to the largest frontier models; local AI offers privacy, control, and predictable costs that many users find compelling.

A common misconception is that running LLMs locally requires expensive GPU hardware or a complex technical setup. Tools like LM Studio and GPT4All have simplified the process significantly, and many smaller models run well on standard desktop computers with sufficient RAM.

Setting Up a Local Environment

Getting started with local LLMs begins with preparing your computer. Windows, macOS, and Linux are all supported by the main tools covered in this guide, such as LM Studio, Ollama, and GPT4All. Each of these platforms offers a streamlined way to download, manage, and chat with local models, so you don’t need prior AI experience to get started.

Next, check your hardware. Many smaller models run well on standard desktops and laptops, but a modern CPU, sufficient RAM, and ideally a dedicated GPU let you run larger models at usable speeds. Make sure your system meets the minimum requirements for your chosen tool and model before downloading anything.

Once your hardware and operating system are ready, install your preferred tool. LM Studio, for example, provides a graphical interface that makes model management simple, while Ollama offers a command-line experience that gives developers finer control. After installation, you can browse, download, and run compatible models directly on your local machine.

With the right tool and a properly configured environment, you have everything you need to run LLMs locally: independence from cloud providers, stronger privacy, and performance limited only by your own hardware.

Quick Start: Best Tools for Running LLMs Locally in 2025

Tools for running local LLMs have matured considerably, and most technical barriers are gone. The following five platforms make running models locally accessible at every skill level, including popular models like Llama and DeepSeek R1:

LM Studio excels as the most beginner-friendly option with its intuitive graphical interface and built-in model browser. Download from lmstudio.ai and enjoy seamless model management across Windows 11, macOS Ventura+, and Ubuntu 22.04+.

GPT4All focuses on privacy-first AI with excellent document chat capabilities through its LocalDocs feature. Available at gpt4all.io for all major operating systems, it offers a curated model marketplace with over 50 compatible models.

Jan provides an open source alternative to ChatGPT with extensible architecture and hybrid local/cloud capabilities. Get started at jan.ai with support for custom extensions and remote API integration.

Ollama is the preferred command-line tool for many developers, offering simple model management and straightforward API integration. To install it, download and run the installer for your operating system and follow the prompts. Once Ollama is installed, you manage and run models from the terminal; the pull command, for example, downloads a model (or updates one you already have) so it is ready to use.

llamafile delivers portable AI through single-file executables that run anywhere without installation. Perfect for quick testing or deployment scenarios where minimal setup is crucial.

For complete beginners, LM Studio provides the smoothest onboarding experience with its visual interface and automatic GPU acceleration. Developers typically prefer Ollama for its flexibility and integration capabilities with existing development workflows.

These tools are designed to be user-friendly for beginners while still offering enough control for advanced users.

Hardware Requirements for Local LLMs

Understanding hardware requirements helps you choose appropriate models for your system and set realistic performance expectations. The good news is that local LLMs run on a wide range of hardware, from modest laptops to high-end workstations.

Minimum specifications for running smaller models include 16GB RAM, a modern CPU like Intel i5-8400 or AMD Ryzen 5 2600, and at least 50GB available storage. These specs handle models up to 7B parameters with acceptable performance for most use cases.

Recommended specifications for better performance include an NVIDIA RTX 4060 with 8GB of VRAM, 32GB of system RAM, and 100GB+ of storage for multiple models. This configuration runs 7B-8B models entirely on the GPU, and the extra system RAM lets larger models run with part of their layers offloaded to the CPU.

Storage requirements vary by model size: smaller models like Phi-3-mini require 2-4GB, while larger models like Llama 3.1 70B need 40-80GB depending on quantization. If you have limited resources, you may want to download the smallest model available, such as Gemma 2B Instruct, to minimize storage and memory usage. Plan for 50-100GB if you want to experiment with multiple models of different sizes.
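The storage figures above follow from simple arithmetic: the weights take roughly (parameter count × bits per weight ÷ 8) bytes. The sketch below is a rough estimator under that assumption only; it ignores metadata and the tensors that quantizers often keep at higher precision, so real quantized files usually come out somewhat larger than the estimate.

```python
def estimate_model_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of a model's weights in gigabytes (1 GB = 1e9 bytes).

    Assumption: every weight is stored at `bits_per_weight`; real files add
    metadata, per-block scale factors, and some higher-precision tensors.
    """
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Compare full-precision (16-bit) files with 8-bit and 4-bit quantized versions.
    for name, params in [("Phi-3-mini", 3.8), ("Llama 3.1 8B", 8.0), ("Llama 3.1 70B", 70.0)]:
        for bits in (16, 8, 4):
            print(f"{name:14s} {bits:2d}-bit: ~{estimate_model_size_gb(params, bits):.1f} GB")
```

For example, Llama 3.1 70B works out to about 35 GB at 4 bits and 70 GB at 8 bits, which lines up with the 40-80GB range quoted above once overhead is included.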

Here’s a rough performance comparison showing tokens per second for different hardware configurations. Actual throughput varies with quantization level, context length, and software version.

| Hardware configuration | Phi-3-mini (3.8B) | Llama 3.1 8B | Mistral 7B | Code Llama 34B |
|---|---|---|---|---|
| CPU only (16GB RAM) | 8-12 tokens/sec | 4-6 tokens/sec | 3-5 tokens/sec | Not recommended |
| RTX 4060 (8GB VRAM) | 45-60 tokens/sec | 25-35 tokens/sec | 30-40 tokens/sec | 8-12 tokens/sec |
| RTX 4090 (24GB VRAM) | 80-120 tokens/sec | 60-80 tokens/sec | 70-90 tokens/sec | 35-45 tokens/sec |
| Apple M2 Pro (32GB) | 35-50 tokens/sec | 20-30 tokens/sec | 25-35 tokens/sec | 15-20 tokens/sec |

GPU acceleration significantly improves performance, but CPU-only inference remains viable for smaller models when no GPU is available. For the best performance, choose a model that fits entirely in your available VRAM (or, for CPU inference, in system RAM).
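When a model does not fit entirely in VRAM, runtimes such as llama.cpp can put only some of its layers on the GPU (llama.cpp’s `-ngl` / `--n-gpu-layers` option) and run the rest on the CPU. The sketch below is a back-of-the-envelope estimate of how many layers fit, assuming the weights are spread evenly across layers; the 1.5 GB reserve for the KV cache and runtime overhead is a hypothetical default, not a measured value.

```python
import math


def gpu_layers_that_fit(model_size_gb: float, n_layers: int, vram_gb: float,
                        reserve_gb: float = 1.5) -> int:
    """Rough number of transformer layers that fit in VRAM.

    Assumptions: weights are divided evenly across `n_layers`, and
    `reserve_gb` (a hypothetical default) is kept free for the KV cache,
    activations, and driver overhead.
    """
    usable_gb = vram_gb - reserve_gb
    if usable_gb <= 0:
        return 0
    per_layer_gb = model_size_gb / n_layers
    # Never offload more layers than the model actually has.
    return min(n_layers, math.floor(usable_gb / per_layer_gb))
```

With these assumptions, a ~4.9 GB 8B model with 32 layers fits completely on an 8 GB card, while a ~40 GB 70B model with 80 layers gets about 45 layers on a 24 GB card and runs the remainder on the CPU, which is why partially offloaded models are noticeably slower.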

Best Open Source Models to Run Locally

Selecting the right model depends on your hardware, intended use cases, and quality requirements. Open source models have reached impressive quality while remaining practical to run locally, and community-driven projects such as Ollama and llama.cpp, together with open-weight releases from leading AI organizations, keep expanding the available options.

Small models (under 8GB) offer excellent efficiency for basic tasks:

- Phi-3-mini (3.8B parameters) provides strong reasoning capabilities in a compact 2.3GB package, ideal when RAM is limited

- Gemma 2B delivers Google’s training quality in an ultra-lightweight 1.4GB model file

- Llama 3.2 3B offers Meta’s latest architecture optimizations with balanced performance and efficiency

Medium models (8-16GB) strike the best balance between capability and resource requirements:

- Llama 3.1 8B serves as the gold standard for general-purpose tasks with strong reasoning and code generation

- Mistral 7B excels at following instructions precisely and handling complex reasoning tasks

- DeepSeek-Coder 6.7B specializes in code generation with support for 80+ programming languages

Large models (16GB+) provide maximum capability for users with sufficient hardware:

- Llama 3.1 70B approaches GPT-4-class performance on many benchmarks, handling complex reasoning and analysis tasks

- Code Llama 34B delivers exceptional coding assistance with deep understanding of software engineering concepts

All of these models are available on Hugging Face under model IDs such as “microsoft/Phi-3-mini-4k-instruct” or “meta-llama/Meta-Llama-3.1-8B-Instruct”. For most users, 8B-parameter models offer the best value: they handle most everyday tasks well while requiring far fewer resources than 70B models.

LM Studio: Easiest Way to Start

LM Studio makes local AI accessible by providing a graphical interface that hides most of the technical complexity. You can browse, download, load, and chat with models without touching the command line, which makes it the ideal starting point for users new to running LLMs locally.

Begin by downloading LM Studio from lmstudio.ai and following the installation steps for your operating system. LM Studio detects compatible GPUs and enables acceleration automatically, so little manual configuration is needed. After installation, launch LM Studio to open the main interface and start exploring available models.

The main interface presents three key sections: Discover for browsing available models, My Models for managing downloaded models, and Chat for interacting with loaded models. In the Discover tab, use the search bar to quickly find specific models based on your requirements. The built-in model library curates high-quality open source models with clear descriptions and hardware requirements.

Setting up the chat interface involves loading a downloaded model and adjusting generation parameters like temperature and context length. The interface provides intuitive sliders and explanations for each setting, making experimentation accessible to non-technical users.

For developers, LM Studio includes a local API server that exposes OpenAI-compatible endpoints. Start the server from within the app to connect local models to existing applications that support OpenAI’s API format.
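Because the endpoints follow OpenAI’s format, any HTTP client can talk to them. The sketch below uses only Python’s standard library and assumes the server is on LM Studio’s default port 1234 and that the model identifier is `llama-3.2-3b-instruct`; check the identifier and port LM Studio actually shows for your setup.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port (1234).
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(model: str, prompt: str, temperature: float = 0.7,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request for the OpenAI-style /chat/completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(model, prompt), timeout=120) as resp:
        data = json.load(resp)
    # OpenAI-style responses put the text in choices[0].message.content.
    return data["choices"][0]["message"]["content"]
```

With the server running, `print(chat("llama-3.2-3b-instruct", "Explain quantum computing in simple terms."))` should print the model’s answer. Applications built on OpenAI’s client libraries can usually be redirected the same way by changing their base URL.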

Installing Your First Model in LM Studio

Navigate to the Discover tab where you’ll find a searchable library of compatible models. Search for “llama-3.2-3b-instruct” to find Meta’s efficient 3B parameter model that works well on modest hardware.

Click the download button to begin the process. LM Studio displays progress indicators showing download speed and estimated completion time. The download manager handles interruptions gracefully, resuming partial downloads when network connectivity returns.

Once the download completes, the model appears in your My Models section. Click it to load it into memory, which typically takes 10-30 seconds depending on model size and storage speed. The interface shows memory usage and confirms when the model is ready for interaction.

Test your installation with sample prompts like “Explain quantum computing in simple terms” or “Write a Python function to calculate Fibonacci numbers”. The model should start responding within seconds, confirming a successful setup.

Common troubleshooting for download failures includes checking available disk space, verifying internet connection stability, and ensuring your firewall allows LM Studio network access. The built-in logs provide detailed error information for resolving issues.

GPT4All: Privacy-Focused Local AI

GPT4All emphasizes privacy and ease of use, making it an excellent choice for users prioritizing data security. The application runs entirely offline once models are downloaded, ensuring your conversations never leave your device.

Download GPT4All from gpt4all.io and install on Windows, macOS, or Linux. The installation process automatically downloads a starter model to ensure immediate functionality. First launch presents a clean interface with clear navigation between chat, models, and settings. After installation, you can prompt the models to generate text for a variety of tasks, such as answering questions or creating content.

The model marketplace offers 50+ curated models with detailed descriptions, hardware requirements, and user ratings. Models are categorized by size and specialty, helping users select appropriate options for their use cases and hardware constraints.

GPU acceleration setup varies by platform but generally involves installing CUDA drivers for NVIDIA graphics cards or ensuring Metal support on macOS. The settings panel provides clear instructions and automatic detection for compatible hardware configurations.

Setting Up LocalDocs for Document Chat

LocalDocs is GPT4All’s standout feature: it lets you chat privately with your own documents without uploading them to external servers, turning local LLMs into useful research and analysis tools.

Access LocalDocs through the dedicated tab and add local folders containing PDFs, text files, markdown documents, or code repositories. The system supports common formats including .pdf, .txt, .md, .docx, and source code files.

The indexing process analyzes document contents to create searchable embeddings stored locally on your device. Indexing time depends on document volume but typically processes hundreds of pages within minutes. Progress indicators show completion status and estimated remaining time.

Example queries against indexed documents might include “Summarize the key findings from my research papers” or “What coding patterns appear most frequently in my projects?”. The system retrieves relevant document sections before generating responses, providing grounded answers with source citations.
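The index, retrieve, and generate steps described above can be illustrated with a toy version. This is not GPT4All’s implementation: as a simplifying assumption it uses word counts instead of a real embedding model and cosine similarity to rank chunks, then pastes the best matches (with their source names) into the prompt that would go to the local model.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def chunk(text: str, size: int = 40) -> list[str]:
    """Split a document into chunks of roughly `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(docs: dict[str, str]) -> list[tuple[str, str, Counter]]:
    """Index every chunk of every document as (source name, chunk text, vector)."""
    return [(name, c, vectorize(c)) for name, text in docs.items() for c in chunk(text)]


def retrieve(index: list[tuple[str, str, Counter]], query: str, k: int = 2) -> list[tuple[str, str]]:
    """Return the k chunks most similar to the query, with their sources."""
    q = vectorize(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[2]), reverse=True)
    return [(name, text) for name, text, _ in ranked[:k]]


def build_prompt(query: str, hits: list[tuple[str, str]]) -> str:
    """Ground the answer by putting the retrieved excerpts, labeled by source, in the prompt."""
    context = "\n".join(f"[{name}] {text}" for name, text in hits)
    return f"Answer using only these excerpts:\n{context}\n\nQuestion: {query}"
```

Keeping the source name with each chunk is what makes citations possible: the model sees which file each excerpt came from and can refer to it in its answer.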

Privacy benefits include complete offline processing with no data transmission to external services. Your documents remain on your local machine throughout the entire process, making LocalDocs suitable for confidential business documents or personal research materials.

Jan: Open Source ChatGPT Alternative

Jan positions itself as a comprehensive open source alternative to commercial AI chat services, offering familiar interfaces with the flexibility of open source development. The platform supports both local inference and hybrid cloud integration for maximum flexibility.

Installation from jan.ai requires verifying system requirements including sufficient RAM and storage space. The installer automatically detects hardware capabilities and suggests optimal configuration settings for your specific setup.

The interface tour reveals a ChatGPT-inspired design with modern UI elements and intuitive navigation. Conversation history, model switching, and settings access follow familiar patterns that reduce learning curves for users transitioning from commercial services.

Model import capabilities allow bringing models from other tools like LM Studio or Ollama, avoiding redundant downloads. Jan supports importing any compatible large language model for local or hybrid use. The system automatically detects compatible model formats and converts them as needed for optimal performance.

The extension marketplace adds functionality through community-developed plugins covering areas like enhanced model management, specialized chat modes, and integration with external tools and services.

Remote API integration enables hybrid deployments where some requests use local models while others leverage cloud services based on complexity or performance requirements. This approach optimizes costs while maintaining local capabilities for sensitive tasks.

Ollama: Developer-Friendly Command Line Tool

Ollama excels as a command line tool designed specifically for developers who prefer programmatic control and integration capabilities. Its simple yet powerful interface makes model management and deployment straightforward for technical users.

Installation varies by operating system: on macOS you can use the official app or Homebrew (brew install ollama), on Linux the official install script (curl -fsSL https://ollama.com/install.sh | sh), and on Windows the installer or winget (winget install Ollama.Ollama).

After installation, users can interact with Ollama through specific terminal commands for downloading, running, and managing models, making it easy to operate entirely from the command line.

Essential commands provide comprehensive model lifecycle management:

- ollama pull llama3.1:8b downloads models from the official library

- ollama run llama3.1:8b starts interactive chat sessions with specified models

- ollama list displays all installed models with sizes and modification dates

- ollama rm model-name removes models to free storage space

Ollama also runs as a local inference server, hosting models on your machine so that other applications can call them over HTTP.
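As a minimal sketch of calling that server, the code below sends a prompt to Ollama’s native `/api/generate` endpoint on its default address (`localhost:11434`) using only the standard library. It sets `"stream": false` so the whole reply comes back as a single JSON object whose `response` field holds the text; the model name assumes you have already run `ollama pull llama3.1:8b`.

```python
import json
import urllib.request

# Ollama's server listens on localhost:11434 by default.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """POST /api/generate with streaming disabled, so the reply is one JSON object."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the request and return the generated text (the "response" field)."""
    with urllib.request.urlopen(build_generate_request(model, prompt), timeout=300) as resp:
        return json.load(resp)["response"]
```

With Ollama running, `print(generate("llama3.1:8b", "Summarize what a Modelfile is."))` prints the completion.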

A Modelfile lets you create custom model variants by setting a base model, a system prompt, and generation parameters. This customizes behavior without retraining the model’s weights, and because a Modelfile is plain text, it integrates well with version control and automation workflows.
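A Modelfile is built from directives such as `FROM`, `PARAMETER`, and `SYSTEM`. To keep all examples in one language, the sketch below generates one from Python; the system prompt and the parameter values are illustrative assumptions, not recommendations.

```python
from __future__ import annotations


def render_modelfile(base: str, system: str, params: dict[str, object]) -> str:
    """Render a minimal Modelfile: a base model, PARAMETER lines, and a SYSTEM prompt."""
    lines = [f"FROM {base}"]
    lines += [f"PARAMETER {key} {value}" for key, value in params.items()]
    lines.append(f'SYSTEM """{system}"""')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Illustrative values: a low temperature for consistent reviews, a 4096-token context.
    print(render_modelfile(
        "llama3.1:8b",
        "You are a concise code reviewer. Point out bugs before style issues.",
        {"temperature": 0.2, "num_ctx": 4096},
    ))
```

Save the output as `Modelfile` and build the variant with `ollama create code-reviewer -f Modelfile` (the name `code-reviewer` is hypothetical), then start it with `ollama run code-reviewer`.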

Integration with development tools includes plugins for popular IDEs like VS Code, enabling code generation and analysis directly within development environments. The standardized API format simplifies integration with existing applications and services.

Running Multiple Models with Ollama

Ollama’s architecture supports concurrent model execution, allowing different models to serve specialized tasks simultaneously. This capability enables sophisticated workflows where smaller models handle basic tasks while larger models tackle complex reasoning.

Switching between models requires simple command syntax like ollama run mistral:7b followed by ollama run codellama:7b in separate terminal sessions. Each model maintains independent conversation context and memory allocation.

Memory management is largely automatic: Ollama loads models on demand and unloads idle ones (after five minutes by default) to free memory for other models.

Running ollama serve starts the API server, which listens on localhost:11434 and exposes both Ollama’s native REST API and OpenAI-compatible endpoints under /v1. Applications designed for cloud AI services can therefore run entirely on local infrastructure.
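By default, Ollama’s native `/api/generate` endpoint streams its reply as newline-delimited JSON: each line is one object carrying a text fragment in `response`, and the final object has `"done": true`. The sketch below parses such a stream; it assumes that field layout and treats an `error` field as a failure.

```python
from __future__ import annotations

import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[bytes | str]) -> Iterator[str]:
    """Yield text fragments from a streaming /api/generate response.

    Each non-empty line is one JSON object; the text is in "response",
    and the last object has "done": true.
    """
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line:
            continue
        chunk = json.loads(line)
        if "error" in chunk:
            raise RuntimeError(chunk["error"])
        yield chunk.get("response", "")
        if chunk.get("done"):
            break
```

Because an HTTP response from `urllib.request.urlopen` can be iterated line by line, you can pass it straight in and print fragments as they arrive, for example `for piece in iter_stream_text(resp): print(piece, end="", flush=True)`, which gives the familiar typing effect of chat interfaces.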

Docker deployment facilitates production environments through official Ollama containers. The containerized approach ensures consistent behavior across development, staging, and production environments while simplifying dependency management.

Advanced Tools: llama.cpp and llamafile

Advanced users seeking maximum control and performance benefit from lower-level tools like llama.cpp and llamafile. To run a model with llama.cpp, you need the model as a GGUF file, the format llama.cpp requires. These tools trade convenience for flexibility and efficiency, making them well suited to production deployments and specialized requirements.

The choice between user-friendly applications and advanced tools depends on your needs. Choose advanced tools when you need custom compilation options, specialized hardware support, or integration into larger systems where full control over the inference engine matters. They also make it straightforward to run fine-tuned models built for specific tasks or domains.

Compiling llama.cpp with GPU support means enabling the backend for your hardware at build time. CUDA support requires the NVIDIA driver and the CUDA toolkit, Metal support is enabled by default on macOS with Apple Silicon, and Vulkan provides broader GPU compatibility across vendors.

Performance optimization through advanced tools includes custom quantization schemes, memory mapping optimizations, and specialized attention implementations. These optimizations can significantly improve inference speed and reduce memory requirements compared to general-purpose solutions.

llamafile executables provide portable AI deployment by packaging models and inference engines into single files that run without installation. This approach simplifies deployment scenarios where traditional installation processes aren’t feasible or desirable.

Model quantization techniques available through advanced tools include 4-bit, 8-bit, and mixed-precision formats that reduce model size while preserving most performance. Users can experiment with different quantization schemes to find optimal balances for their specific use cases.
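The core idea behind these formats can be shown with a simplified block quantizer: each block of weights stores one floating-point scale plus a small integer per weight. The sketch below uses symmetric absmax scaling to signed 4-bit values; llama.cpp’s formats such as Q4_0 follow the same block-plus-scale idea with blocks of 32 weights, but their exact encoding differs from this illustration.

```python
from __future__ import annotations


def quantize_block(weights: list[float], bits: int = 4) -> tuple[float, list[int]]:
    """Symmetric absmax quantization of one block to signed `bits`-bit integers.

    Returns one float scale for the whole block plus one small integer per weight.
    """
    qmax = 2 ** (bits - 1) - 1          # 7 for 4-bit
    absmax = max(abs(w) for w in weights)
    scale = absmax / qmax if absmax else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return scale, q


def dequantize_block(scale: float, q: list[int]) -> list[float]:
    """Recover approximate weights; each error is at most scale / 2."""
    return [scale * v for v in q]
```

The storage saving comes from the block layout: 32 weights at 4 bits plus one 16-bit scale is 144 bits, or 4.5 bits per weight instead of 16, and the price is a rounding error of at most half a scale step per weight.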

Creating a Local API Server

A local API server lets other applications use your LLM while you keep full control over your data and infrastructure. Both LM Studio and Ollama make this straightforward, whether you prefer a graphical interface or the command line.

To get started, choose LM Studio or Ollama and install it. Then download a model that fits your hardware and requirements, set parameters such as context length, and enable GPU acceleration if your system supports it.

Starting the server is simple: LM Studio provides a server toggle inside the app, while Ollama is controlled from the terminal. The server listens on a local port (1234 by default for LM Studio and 11434 for Ollama) and returns generated text in response to requests from your applications.

With the server running, you can build custom chatbots, automate workflows, and add language capabilities to your own software, all while your data and model stay within your controlled environment.

Securing Your Local LLM with an API Key

Securing access to your local LLM matters as soon as more than one application or user connects to it. An API key system ensures that only authorized requests reach your model. Note that Ollama does not check API keys itself and listens only on localhost by default; if you expose it on a network, put a reverse proxy or small gateway in front of it to enforce keys.

Generate a unique API key for each application or user. Store keys in environment variables or encrypted configuration files so they are never exposed accidentally, and configure your server (or a proxy in front of it) to validate the key on every request, rejecting unauthorized requests before they reach the model.

Rotate your API keys regularly to limit the damage from a leak, and revoke keys that are no longer needed or may have been compromised. These practices keep you in control of your local LLM and protect both the model and the sensitive data it processes.
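The key-handling practices above can be sketched as the check a small gateway in front of your local server might run. This is a minimal sketch under stated assumptions: keys arrive in an `Authorization: Bearer <key>` header, only SHA-256 digests of valid keys are stored, and they are read from a hypothetical `LLM_API_KEY_HASHES` environment variable.

```python
from __future__ import annotations

import hashlib
import hmac
import os
import secrets


def new_api_key() -> str:
    """Generate a random key to hand to one application or user."""
    return secrets.token_urlsafe(32)


def hash_key(key: str) -> str:
    """Store only a SHA-256 digest of each key, never the key itself."""
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def load_key_hashes(env_var: str = "LLM_API_KEY_HASHES") -> set[str]:
    """Read allowed key digests from a comma-separated environment variable (hypothetical name)."""
    return {h.strip() for h in os.environ.get(env_var, "").split(",") if h.strip()}


def is_authorized(auth_header: str | None, allowed_hashes: set[str]) -> bool:
    """Check an 'Authorization: Bearer <key>' header against the allowed digests."""
    if not auth_header or not auth_header.startswith("Bearer "):
        return False
    presented = hash_key(auth_header[len("Bearer "):].strip())
    # compare_digest avoids leaking information through timing differences.
    return any(hmac.compare_digest(presented, h) for h in allowed_hashes)
```

Rotation and revocation then become edits to the stored digests: add the digest of a freshly generated key, and delete the digest of any key you want to retire; the gateway rejects the old key immediately.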

Practical Applications and Use Cases

Running LLMs locally enables many practical applications in professional and personal contexts. The combination of privacy, unlimited usage, and offline operation opens possibilities that cloud services cannot offer.

Code generation and debugging are primary use cases for local AI. Models like DeepSeek-Coder and Code Llama excel at understanding programming contexts, generating boilerplate code, explaining complex algorithms, and suggesting bug fixes across 80+ programming languages.

Content creation workflows benefit from unlimited local generation. Blog posts, emails, marketing copy, and social media content can be drafted and revised iteratively without API costs or rate limits. Fine-tuning a local model on your own writing style adds a level of personalization that is harder to achieve with hosted services.

Data analysis and summarization tasks leverage local models’ ability to process sensitive information without external transmission. Financial reports, legal documents, medical records, and proprietary research can be analyzed while maintaining complete confidentiality.

Language translation without external services provides privacy for sensitive communications while supporting dozens of language pairs. Local models handle technical documentation translation, multilingual customer support, and international business communications entirely offline.

Real-world examples include law firms using local models for document analysis, software companies deploying AI-powered coding assistants, and content creators building personalized writing tools. Each of these solutions runs on the user’s own hardware, preserving privacy and control, and together they show the versatility and practical value of local AI deployment.

Performance Optimization and Troubleshooting

Maximizing performance from local LLMs requires understanding system resources, model characteristics, and optimization techniques. Proper configuration can dramatically improve response times and make larger models usable on modest hardware.

GPU acceleration setup differs by vendor but generally involves installing the appropriate drivers and making sure your software detects the hardware. NVIDIA users need a current driver (plus the CUDA toolkit if they compile tools such as llama.cpp themselves), while AMD users need ROCm on supported Linux distributions.

Model quantization reduces memory requirements by storing model weights at lower precision. Going from 16-bit to 4-bit weights cuts model size by about 75% while typically preserving most of the output quality, which puts larger models within reach of consumer GPUs with limited VRAM.

Common error messages and their solutions include:

- “CUDA out of memory”: Reduce model size, close other applications, or enable CPU offloading

- “Model loading failed”: Verify model file integrity and sufficient disk space

- “Slow inference speed”: Check GPU acceleration settings and consider model quantization

Resource monitoring during inference helps identify bottlenecks and optimize configurations. Task Manager on Windows, Activity Monitor on macOS, or htop on Linux reveal CPU utilization, memory usage, and GPU activity patterns during model execution.

Temperature and sampling parameters shape the style and variability of the output rather than its speed. Lower temperatures produce more consistent, predictable outputs, while higher values increase variety and creativity. Top-k and top-p sampling limit which candidate tokens can be chosen, balancing response diversity against coherence.
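To make these parameters concrete, here is a simplified sketch of how temperature, top-k, and top-p can be applied to a model’s raw scores (logits) before a token is drawn. Runtimes such as llama.cpp chain additional samplers and may order them differently, so this illustrates the idea rather than any tool’s exact implementation. The three-token vocabulary and its scores are made up.

```python
import math
import random


def _normalize(pairs):
    total = sum(p for _, p in pairs)
    return [(tok, p / total) for tok, p in pairs]


def filtered_distribution(logits, temperature=1.0, top_k=None, top_p=None):
    """Turn {token: logit} into {token: probability} after sampling filters."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Temperature: dividing logits by T < 1 sharpens the distribution,
    # T > 1 flattens it. Subtracting the max keeps exp() numerically stable.
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    probs = _normalize([(tok, math.exp(v - peak)) for tok, v in scaled.items()])
    probs.sort(key=lambda pair: pair[1], reverse=True)
    # Top-k: keep only the k most likely tokens.
    if top_k is not None:
        probs = _normalize(probs[:top_k])
    # Top-p (nucleus): keep the smallest set whose cumulative probability >= p.
    if top_p is not None:
        kept, cumulative = [], 0.0
        for tok, p in probs:
            kept.append((tok, p))
            cumulative += p
            if cumulative >= top_p:
                break
        probs = _normalize(kept)
    return dict(probs)


def sample_token(logits, **settings):
    """Draw one token at random from the filtered distribution."""
    dist = filtered_distribution(logits, **settings)
    return random.choices(list(dist), weights=list(dist.values()), k=1)[0]


if __name__ == "__main__":
    logits = {"cat": 2.0, "dog": 1.0, "fish": 0.0}  # made-up scores
    for t in (0.5, 1.0, 1.5):
        dist = filtered_distribution(logits, temperature=t)
        print(t, {tok: round(p, 3) for tok, p in dist.items()})
```

Lowering the temperature raises the top token’s share. Setting `top_k=1` reduces sampling to always picking the single most likely token (greedy decoding).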

Context length settings trade memory use against how much of a conversation or document the model can consider at once. Longer contexts allow richer interactions but need more memory, because the cache of past tokens grows in proportion to the context length. Most use cases work well with 2048-4096 token contexts.
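The memory cost of context comes mainly from the key-value (KV) cache, which stores a key and a value vector per layer for every token in the context, so it grows linearly with context length. As an example, the sketch below uses the published configuration of Llama 3 8B (32 layers, 8 key-value heads, head dimension 128) with 16-bit cache entries. Other models, and tools that quantize the cache, will give different numbers.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, context_len, bytes_per_value=2):
    """Estimate KV-cache size: keys + values for every layer and every token."""
    per_token = 2 * n_layers * n_kv_heads * head_dim * bytes_per_value  # 2 = K and V
    return per_token * context_len


if __name__ == "__main__":
    # Example configuration (Llama 3 8B): 32 layers, 8 KV heads, head_dim 128.
    for ctx in (2048, 4096, 8192):
        mib = kv_cache_bytes(32, 8, 128, ctx) / 2**20
        print(f"{ctx:>5} tokens -> {mib:.0f} MiB")
```

With these assumptions, each token costs 128 KiB of cache, so 4,096 tokens need 512 MiB on top of the model weights, and doubling the context doubles that figure.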

Best Practices for Local LLM Setup

To get the most from your local LLM, aim for a setup that balances performance and security. Start by choosing a model that fits your needs: compare parameter counts, file sizes, and intended applications to find one that matches both your hardware and your use case.

Tune key settings such as context length, and enable GPU acceleration wherever possible. Confirm that your operating system is supported by your chosen tools and models, and keep your system and software up to date to benefit from new features and security fixes.

Monitor system resources, especially RAM and GPU memory, to catch bottlenecks early, particularly when running larger models or several models at once. If you prefer not to work in a terminal, graphical tools such as LM Studio or GPT4All make managing models and adjusting settings straightforward.

Keep sensitive data within your local environment and avoid sending confidential information over the internet. Test and compare different models regularly to confirm you are using the best option for each application, and be ready to fine-tune or switch models as your requirements change.

Following these practices gives you a local LLM environment that is secure, responsive, and tuned to your requirements.

Cost Analysis: Local vs Cloud AI Services

Understanding the economics of local versus cloud AI services helps make informed decisions about infrastructure investments. The analysis involves upfront hardware costs, ongoing expenses, and break-even calculations based on usage patterns.

Upfront hardware investment for a capable local AI system ranges from $800-1,500 for mid-range configurations to $3,000-5,000 for high-end setups. These costs cover a modern CPU, sufficient RAM, a capable GPU, and adequate storage for multiple models.

Monthly costs for cloud AI services vary widely: ChatGPT Plus costs $20/month, Claude Pro costs $20/month, and API usage can range from $10 to more than $500 per month depending on volume. Team and enterprise plans are billed per user, so costs multiply across an organization.

Break-even analysis suggests that heavy users, particularly those replacing substantial API bills, can recover their hardware investment within roughly 6-18 months, while a single $20/month subscription takes far longer to offset. Users processing sensitive data or requiring 24/7 availability often justify local infrastructure regardless of pure cost considerations.

Energy costs for running local models continuously add approximately $30-100 monthly to electricity bills, depending on hardware efficiency and local utility rates. Modern GPUs include power management features that reduce consumption during idle periods.
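The break-even point is straightforward arithmetic: divide the hardware cost by the monthly saving, that is, the cloud spend you replace minus the extra electricity. The sketch below is a simplified model that ignores resale value, depreciation, and maintenance. The wattage, electricity price, and spend figures in the example are illustrative assumptions, not measurements.

```python
import math


def monthly_energy_cost(watts: float, hours_per_day: float,
                        price_per_kwh: float, days: int = 30) -> float:
    """Electricity cost of running hardware at an average draw of `watts`."""
    kwh = watts / 1000 * hours_per_day * days
    return kwh * price_per_kwh


def break_even_months(hardware_cost: float, cloud_monthly: float,
                      energy_monthly: float) -> float:
    """Months until local hardware pays for itself; inf if it never does."""
    monthly_saving = cloud_monthly - energy_monthly
    if monthly_saving <= 0:
        return math.inf
    return hardware_cost / monthly_saving


if __name__ == "__main__":
    # Illustrative assumptions: 400 W average draw, 8 h/day, $0.15 per kWh.
    energy = monthly_energy_cost(400, 8, 0.15)
    for cloud in (20, 100, 300):  # monthly cloud spend being replaced
        months = break_even_months(1500, cloud, energy)
        print(f"${cloud}/month cloud -> break-even in {months:.1f} months")
```

Under these assumptions, a $1,500 machine pays for itself in about a year and a half when it replaces $100/month of cloud spend, but takes decades when it replaces a single $20 subscription.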

Total cost of ownership calculations over 2-3 years generally favor local solutions for:

- Users with moderate to heavy AI usage patterns

- Organizations requiring data privacy compliance

- Applications needing guaranteed availability

- Teams wanting unlimited experimentation capability

Cloud services remain economical for:

- Occasional users with minimal monthly volume

- Teams requiring cutting-edge model access

- Organizations without IT infrastructure expertise

- Applications needing seamless scaling capabilities

The decision often involves non-financial factors including privacy requirements, data sovereignty, internet connectivity reliability, and organizational control preferences that tip the balance toward local deployment despite higher initial costs.

Local large language models represent a shift toward more accessible, private, and cost-effective AI deployment. As models become more efficient and tools more user-friendly, the barrier to entry keeps falling while capabilities expand rapidly.

Whether you’re a developer seeking coding assistance, a business protecting sensitive data, or an enthusiast exploring AI, running LLMs locally gives you a high degree of control over your AI experience. Start with user-friendly tools like LM Studio or GPT4All, experiment with different models to find the right balance of capability and performance, and expand your setup as your needs evolve.

The future of AI lies not only in massive data centers but also on your own hardware, under your control. Download your first local model today and try self-hosted AI for yourself.

Introduction to Local AI

Local AI changes how individuals and organizations use artificial intelligence by running large language models directly on your own computer. Instead of depending on cloud-based services, running LLMs locally means that all processing happens on your device, giving you control over model parameters and over how your sensitive data is handled. Because your data never leaves your machine, this approach improves privacy. It also removes network round trips, so response times depend on your own hardware rather than on a remote service.

With local AI, you can fine-tune large language models for your own needs, whether you are optimizing for specific tasks or experimenting with different configurations. Running LLMs locally lets you customize models, manage updates, and deploy solutions tailored to your workflow while your information stays on your machine. As more users adopt local deployment, the ecosystem of tools and models keeps growing, making it easier to run state-of-the-art large language models (LLMs) on your own computer.

Getting Started with Local LLMs

Getting started with a local LLM is more accessible than ever, thanks to mature tools and a growing catalog of capable models. Start by choosing a platform such as LM Studio or Ollama; both are designed to simplify running LLMs on your own machine. LM Studio offers a graphical interface, while Ollama is built around the command line, so you can pick the workflow that matches your technical comfort level.

After installing your platform, use its built-in search to browse available models: LM Studio searches repositories such as Hugging Face, and Ollama pulls from its own model library. Download the model file you want; LM Studio also indicates whether a model is likely to fit in your machine’s memory, although that estimate is not a guarantee. Once the model is in place, you can start the local inference server and interact with the model through the graphical interface or the command line. This setup makes it easy to experiment with multiple models, manage your local collection, and work without any dependence on cloud infrastructure.
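If you chose Ollama, you can also check which models are installed from a script. Its REST API lists local models at `GET /api/tags`, which returns a JSON object whose `models` array has a `name` for each entry. Here is a minimal standard-library sketch, assuming the server is on its default address `http://localhost:11434`.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434"  # Ollama's default address


def model_names(tags_payload: dict) -> list[str]:
    """Extract model names from an /api/tags response body."""
    return [entry["name"] for entry in tags_payload.get("models", [])]


def list_local_models(base_url: str = BASE_URL) -> list[str]:
    """Query a running Ollama server for its installed models."""
    with urllib.request.urlopen(f"{base_url}/api/tags") as resp:
        return model_names(json.load(resp))


# Usage (requires a running Ollama server):
# print(list_local_models())
```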

Setting Up the Local Inference Server

A local inference server is the backbone of running LLMs locally: it loads your chosen model and makes it available to other programs in an efficient, private environment. Tools like LM Studio and Ollama make setting one up straightforward, even for users new to AI. Select a model file, configure essential parameters such as context length, and enable GPU acceleration when your hardware supports it for faster inference.
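After loading a model, it is worth confirming that GPU acceleration is actually in use. Ollama’s `GET /api/ps` endpoint lists loaded models with their total `size` and the portion held in video memory (`size_vram`); a share well below 100% means some layers are running on the CPU. This minimal sketch assumes the default address `http://localhost:11434`.

```python
import json
import urllib.request


def gpu_share(running_model: dict) -> float:
    """Fraction of a loaded model's memory that sits in VRAM (0.0 to 1.0)."""
    size = running_model.get("size", 0)
    return running_model.get("size_vram", 0) / size if size else 0.0


def report_loaded_models(base_url: str = "http://localhost:11434") -> None:
    """Print each loaded model and how much of it is offloaded to the GPU."""
    with urllib.request.urlopen(f"{base_url}/api/ps") as resp:
        for model in json.load(resp).get("models", []):
            print(f"{model['name']}: {gpu_share(model):.0%} in GPU memory")


# Usage (requires a running Ollama server with a model loaded):
# report_loaded_models()
```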

Ollama uses GPU acceleration automatically on supported hardware, which can greatly speed up inference. You can choose the address and port the server listens on (Ollama reads this from the OLLAMA_HOST environment variable and defaults to port 11434), making it reachable from a web UI or from other applications. LM Studio offers a similar setup, letting you manage models and server settings through its graphical interface; its server defaults to port 1234. With the server running, you have a private environment for running LLMs locally and using the full capabilities of your chosen models.
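Client scripts need to know where the server listens. One approach is to read the same `OLLAMA_HOST` variable the server uses and fall back to Ollama’s default `127.0.0.1:11434`. The helper below is hypothetical and handles only plain `host`, `host:port`, or `http(s)://host:port` values, not IPv6 addresses.

```python
import os
from urllib.parse import urlsplit

DEFAULT_HOST = "127.0.0.1"
DEFAULT_PORT = 11434  # Ollama's default port


def ollama_base_url(env=None) -> str:
    """Build a client base URL from OLLAMA_HOST (hypothetical helper)."""
    env = os.environ if env is None else env
    raw = env.get("OLLAMA_HOST", "").strip()
    if not raw:
        return f"http://{DEFAULT_HOST}:{DEFAULT_PORT}"
    if "://" not in raw:
        raw = "http://" + raw  # let urlsplit parse bare host:port values
    parts = urlsplit(raw)
    host = parts.hostname or DEFAULT_HOST
    if host == "0.0.0.0":
        # On the server, 0.0.0.0 means "listen on all interfaces";
        # a client on the same machine should connect to loopback instead.
        host = DEFAULT_HOST
    port = parts.port or DEFAULT_PORT
    return f"{parts.scheme}://{host}:{port}"


# Usage:
# print(ollama_base_url())  # e.g. http://127.0.0.1:11434 when OLLAMA_HOST is unset
```

Reading the same variable on both sides keeps client scripts working when you move the server to a different port.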

Running LLMs Locally with Popular Tools

Choosing the right tool is key to a smooth experience when running LLMs locally. LM Studio, Ollama, and GPT4All are among the most widely used options, each suited to different workflows. LM Studio’s graphical interface makes it easy to manage multiple models, switch between them, and tune settings for your projects. If you prefer the terminal, Ollama offers a robust command-line experience that fits advanced workflows and integrates well with developer tooling.

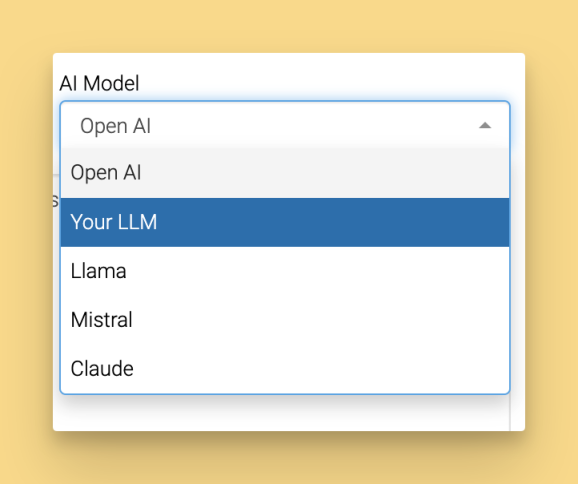

GPT4All is another solid option: it supports a wide range of models, including popular ones like Mistral 7B, and offers a streamlined interface for chatting with your local AI. These platforms do more than run models; each can also expose a local API server, so you can connect your models to existing applications and services. Whether you are managing multiple models, trying out fine-tuned variants, or just getting started with local LLMs, these tools give you the flexibility you need.
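Because LM Studio and Ollama both expose OpenAI-compatible endpoints (`/v1/chat/completions`, on ports 1234 and 11434 by default), one small client can talk to either. Below is a standard-library sketch. The model identifier in the usage line is an assumption and must match a model available in the tool you target. The sketch sends no API key, which matches a default local setup.

```python
import json
import urllib.request

# Default OpenAI-compatible base URLs for two local servers.
SERVERS = {
    "lmstudio": "http://localhost:1234/v1",
    "ollama": "http://localhost:11434/v1",
}


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Create a POST request for an OpenAI-style chat completion."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def reply_text(completion: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style response."""
    return completion["choices"][0]["message"]["content"]


def chat(server: str, model: str, prompt: str) -> str:
    """Send one prompt to the named local server and return the reply text."""
    req = build_chat_request(SERVERS[server], model, prompt)
    with urllib.request.urlopen(req) as resp:
        return reply_text(json.load(resp))


# Usage (requires the chosen server to be running with the model available):
# print(chat("ollama", "llama3.2", "Summarize the benefits of local LLMs."))
```

Keeping to the OpenAI request format means code written against one local tool can usually be pointed at the other by changing only the base URL and model name.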

Creating a Local API Server

Setting up a local API server is one of the most useful steps for integrating large language models into applications and workflows. With LM Studio or Ollama, the process is straightforward: load your chosen model and configure the server to listen on your preferred port. Ollama does not check API keys itself, so keep the server bound to localhost, or place it behind a reverse proxy that handles authentication if other machines need access. You can then reach your models through a web UI or programmatically via the API, which opens up many practical applications.
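Ollama’s native API offers more direct control than the OpenAI-compatible one. A request to `POST /api/chat` takes a `messages` list and an optional `options` object, in which `num_ctx` sets the context window and `temperature` the sampling temperature. This sketch assumes the default address and a pulled model named `llama3.2`. No authentication header is sent, since Ollama does not check one.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def chat_payload(model: str, messages: list[dict], num_ctx: int = 4096,
                 temperature: float = 0.7) -> dict:
    """Non-streaming /api/chat body with per-request model options."""
    return {
        "model": model,
        "messages": messages,
        "stream": False,
        "options": {"num_ctx": num_ctx, "temperature": temperature},
    }


def ask(model: str, question: str) -> str:
    """Send a single-turn chat and return the assistant's reply."""
    payload = chat_payload(model, [{"role": "user", "content": question}])
    req = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        # Non-streaming responses carry the reply in message.content.
        return json.load(resp)["message"]["content"]


# Usage (requires a running Ollama server):
# print(ask("llama3.2", "List three uses for a local API server."))
```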

Ollama’s API server works out of the box, making it easy to connect local LLMs to other tools and platforms. LM Studio offers similar capabilities, letting you manage its local API server through a graphical interface. Running your own local API server gives you the flexibility to deploy models in real-world scenarios, automate complex tasks, and build custom solutions that fit your needs, all while your data stays under your control. Whether you are building new applications or enhancing existing workflows, a local API server is the key to getting the most out of your local AI setup.
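For interactive tools, you will usually want streaming so that text appears as it is generated. With `stream` left at its default of `true`, Ollama’s `/api/chat` returns one JSON object per line. Each object carries a text fragment in `message.content`, and the last one has `done: true`. The sketch keeps line parsing separate from the network call so the parsing can be checked offline. It assumes the default address and a model named `llama3.2`.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def stream_text(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield content fragments from Ollama's newline-delimited JSON stream."""
    for raw in lines:
        if not raw.strip():
            continue  # skip blank lines, if any
        chunk = json.loads(raw)
        content = chunk.get("message", {}).get("content", "")
        if content:
            yield content
        if chunk.get("done"):
            break


def stream_chat(prompt: str, model: str = "llama3.2",
                url: str = "http://localhost:11434/api/chat") -> None:
    """Print a reply incrementally as the server streams it."""
    body = json.dumps({"model": model,
                       "messages": [{"role": "user", "content": prompt}]}).encode()
    req = urllib.request.Request(url, data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:  # iterating yields one line at a time
        for piece in stream_text(resp):
            print(piece, end="", flush=True)
    print()


# Usage (requires a running Ollama server):
# stream_chat("Write a haiku about private AI.")
```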